Introduction

NVIDIA first announced their GeForce RTX 40 Series GPUs in September 2022, including an RTX 4090 model and a pair of RTX 4080 12GB and 16GB models. They have since re-branded the RTX 4080 12GB as the RTX 4070 Ti 12GB, and have been releasing these GPUs one by one over the past few months. The RTX 4070 Ti became available recently, completing their trio of GeForce RTX 40 Series GPUs. While lower-end GPUs are likely to come in the future, this is a good time for us to do in-depth testing to see how these 40 Series cards perform in various content creation workflows.

In this article, we will be looking at several applications from the Topaz AI suite, including Topaz Gigapixel AI, Sharpen AI, DeNoise AI, and Video AI. As a comparison, we will include the full lineup from the previous generation GeForce RTX 30 Series, the GeForce RTX 2080 Ti for additional context, and a sampling of cards from NVIDIA’s primary competition: the AMD Radeon RX Series.

If you want to read more about the new GeForce RTX 40 Series cards (including the 4070 Ti, 4080, and 4090) and what sets them apart from the previous generation, we recommend checking out our main NVIDIA GeForce 40 Series vs AMD Radeon 7000 for Content Creation article. That post includes more detailed information on the GPU specifications, testing results for various other applications, and the complete test setup details for the hardware and software used in our testing.

Testing Methodology

The Topaz AI suite is still fairly new to our standard set of GPU and CPU benchmarks, and since our initial set of testing in the Topaz AI: CPU & GPU Performance Analysis article, we have made some tweaks to our process.

Big thank you to Shotkit for providing a terrific resource for anyone to download and examine RAW photos from various cameras!

Previously, for the photo-based applications, we separated the tests by photo format: .CR2, .NEF, .ARW, and .RAF. After we had all the results of that testing, however, we found that while the RAW format can affect performance, the photo’s composition can make just as much of a difference. Since separating the testing that way added a significant amount of testing time, we decided to switch to a single set of tests for Gigapixel, DeNoise, and Sharpen AI, and log the total time it took to process all the images when using the “Auto” settings. We then converted that time in seconds into an “Images per Minute” metric, which is easier to handle from a scoring perspective since it maintains a standard “higher is better” methodology.
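As a rough illustration of that conversion, the sketch below turns a batch's total processing time into an images-per-minute rate. The function name and the 90-second timing are hypothetical and are not taken from our benchmark scripts or results.

```python
def images_per_minute(image_count: int, total_seconds: float) -> float:
    """Convert a batch's total processing time into an images-per-minute rate."""
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    return image_count * 60.0 / total_seconds


# Hypothetical timing (not a measured result): 12 images processed in 90 seconds.
rate = images_per_minute(12, 90.0)
print(f"{rate:.1f} images per minute")  # 8.0 images per minute
```

Because the rate grows as the total time shrinks, a faster GPU always produces a larger number, which is what keeps the metric "higher is better".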

As a part of combining all the RAW formats, we also trimmed down the tests from 24 images in total to 12. We made sure to pick images with a range of subjects and compositions to maintain a well-rounded data set without affecting the overall results significantly, while allowing the benchmarks to complete much faster.

All tests were run in GPU mode on the primary GPU with graphics memory consumption set to “High”. You can also run each application in CPU mode, but that is rarely done and does not provide any benefit from what we could tell. In total, the images we used and the AI model selected include:

| Photo from Shotkit | Gigapixel AI (4x Scale) | DeNoise AI | Sharpen AI |
|---|---|---|---|
| Canon-5DMarkII_Shotkit.CR2 | Standard | Low Light | Motion Blur – Very Noisy |
| Canon-5DMarkII_Shotkit-2.CR2 | Standard | Low Light | Standard |
| Canon-6D-Shotkit-11.CR2 | Standard | Low Light | Standard |
| Nikon-D4-Shotkit-2.NEF | Standard | RAW | Motion Blur – Very Blurry |
| Nikon-D750-Shotkit-2.NEF | Standard | RAW | Standard |
| Nikon-D3500-Shotkit-4.NEF | Standard | RAW | Out of Focus – Very Noisy |
| Sony-a7c-Shotkit.ARW | Standard | RAW | Out of Focus – Very Noisy |
| Sony-a7c-Shotkit-3.ARW | Standard | RAW | Out of Focus – Very Noisy |
| Sony-RX100Mark6-Shotkit-2.ARW | Standard | RAW | Motion Blur – Very Noisy |
| Fujifilm-X100F-Shotkit-5.RAF | Low Res | Low Light | Out of Focus – Very Noisy |
| Fujifilm-XH1-Shotkit-5.RAF | Standard | Low Light | Motion Blur – Normal |
| Fujifilm-GFX-50S-Shotkit.RAF | Standard | RAW | Motion Blur – Very Blurry |

For Topaz Video AI, the tests are unchanged from that first article and consist of the following:

| Preset | Source Video | AI Model |
|---|---|---|
| 4x slow motion | H.264 4:2:0 8bit 1080p 59.94FPS | Chronos |
| Deinterlace footage and upscale to HD | AVI 4:2:2 8bit 720×470 29.97FPS Interlaced | Dione: DV 2x FPS |
| Upscale to 4K | H.264 4:2:0 8bit 1080p 59.94FPS | Proteus |

Raw Benchmark Results

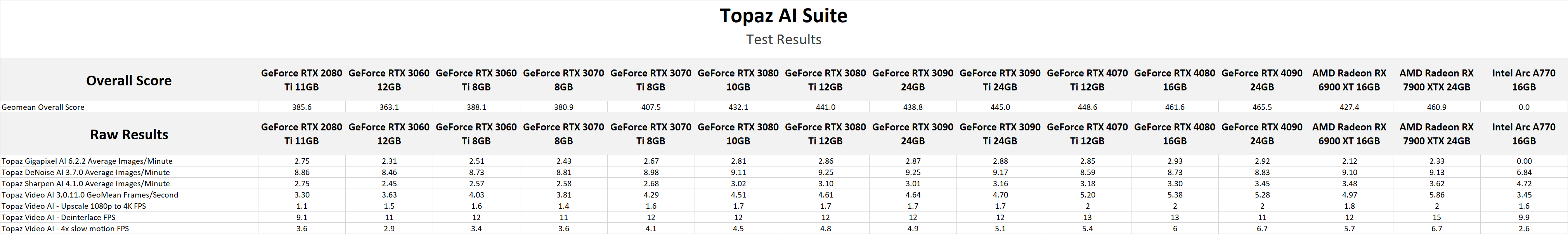

While we will be going through our testing in detail in the next section, we always like to provide the raw results for those that want to dig into the details. If there is a specific task you tend to perform in your workflow, examining the raw results will be much more applicable than our more general analysis.

Overall Topaz AI Performance Analysis

The Topaz AI suite includes several applications, and for our testing, we are currently focusing on Video AI, Gigapixel AI, DeNoise AI, and Sharpen AI.

While Topaz AI is not considered a beta application, we want to note that Topaz Labs is still putting a lot of work into these applications, and performance can change from month to month or even week to week. In fact, since we began the testing for this article, there have been three updates to Video AI – including changes specifically to improve performance on NVIDIA GPUs using TensorRT. In addition, we have been seeing issues with Gigapixel AI crashing with Intel Arc GPUs (which is why the Arc A770 has no result in the Overall and Gigapixel charts), but there has since been an update specifically to reduce crashes on Intel GPUs.

Unfortunately, we can’t re-do all of our GPU testing every time there is an update because updates are so frequent that we would constantly be re-starting the testing and never get to the point of actually publishing the results. We mention this to clarify that while these results are accurate for the versions we tested, performance and stability will likely change the further you get from those specific versions. With that said, the specific versions used for this testing are:

- Topaz Video AI 3.0.11.0

- Topaz Gigapixel AI 6.2.2

- Topaz DeNoise AI 3.7.0

- Topaz Sharpen AI 4.1.0

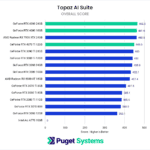

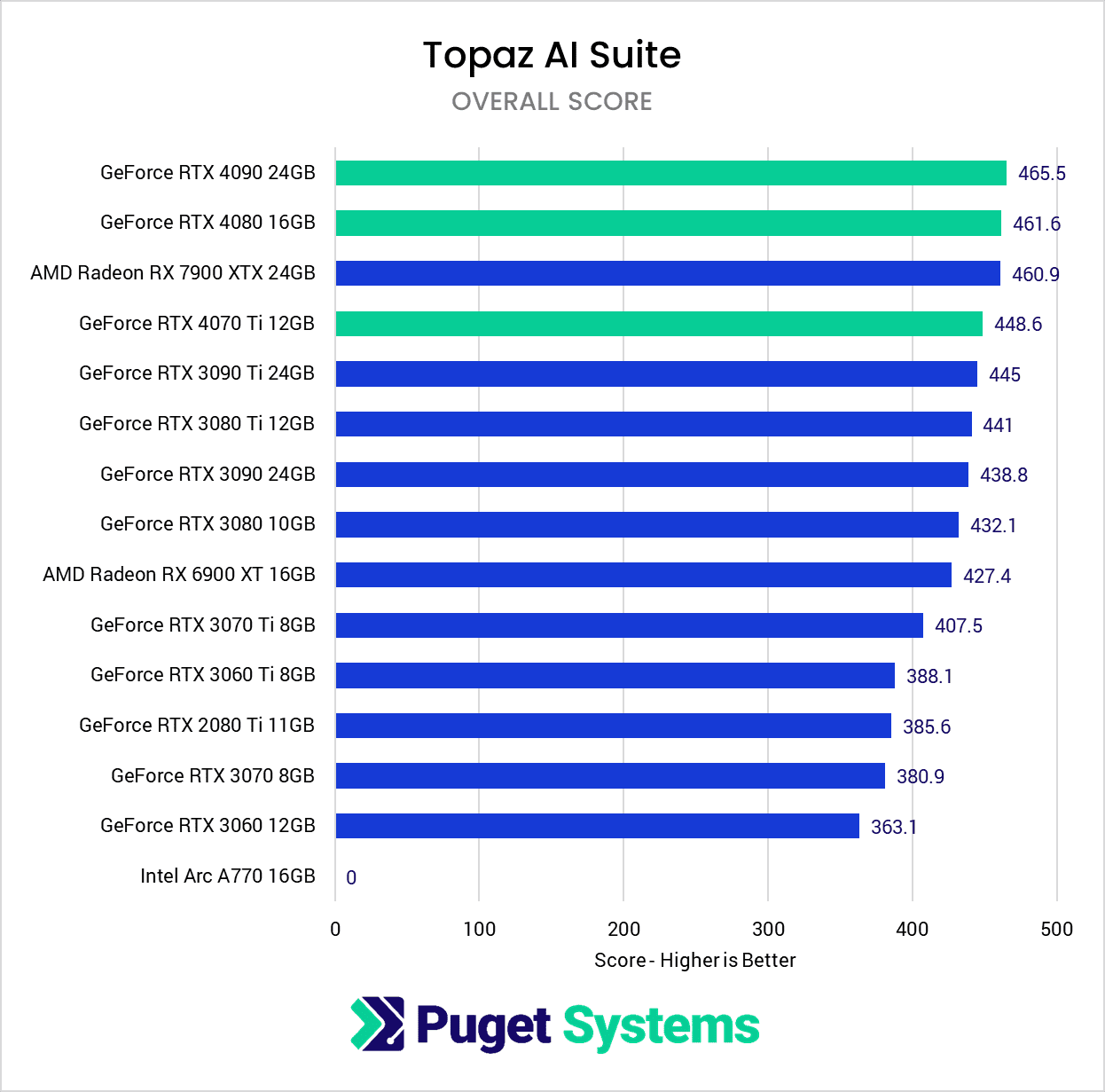

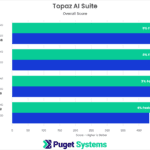

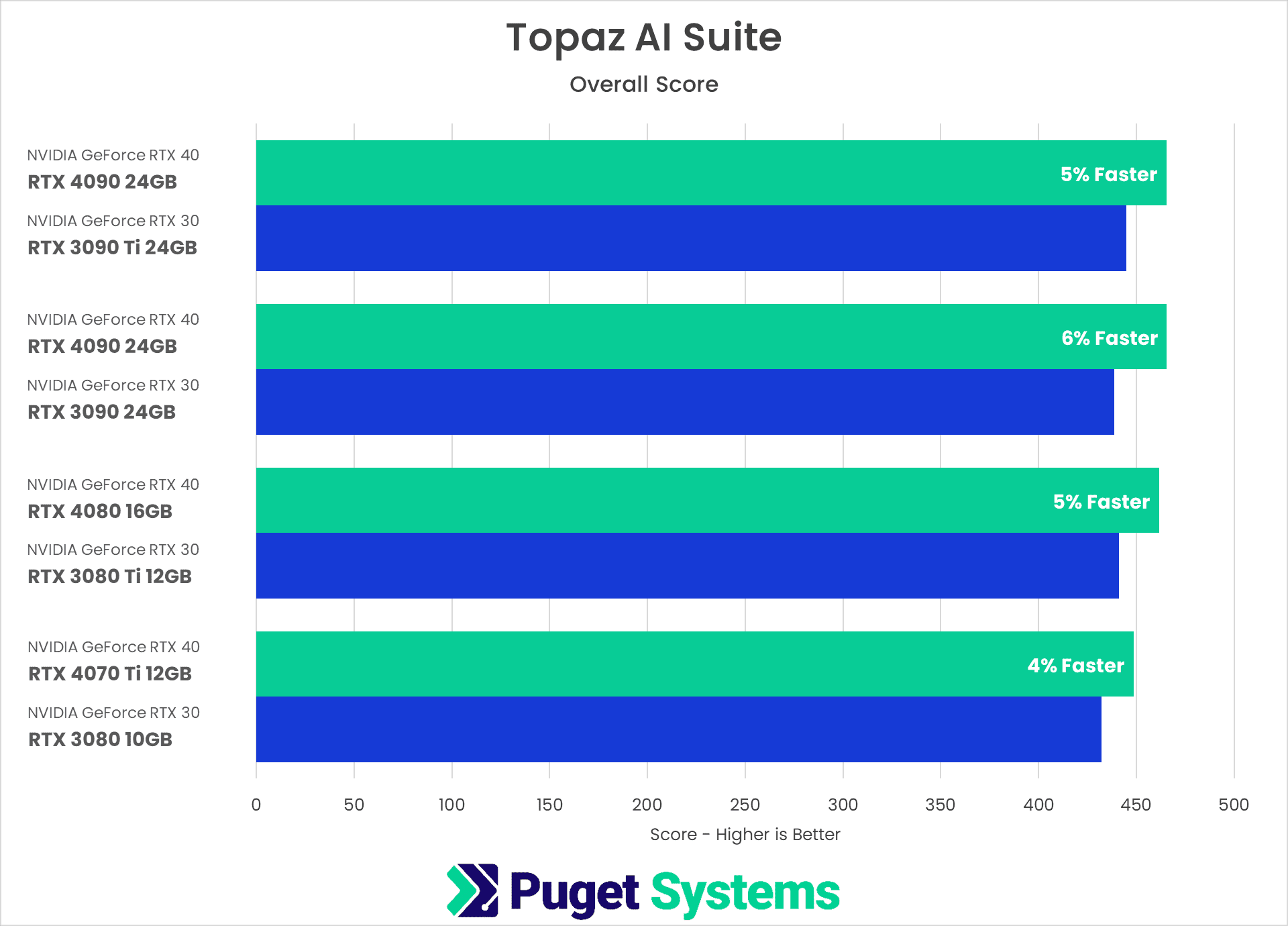

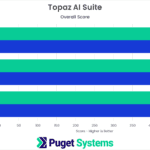

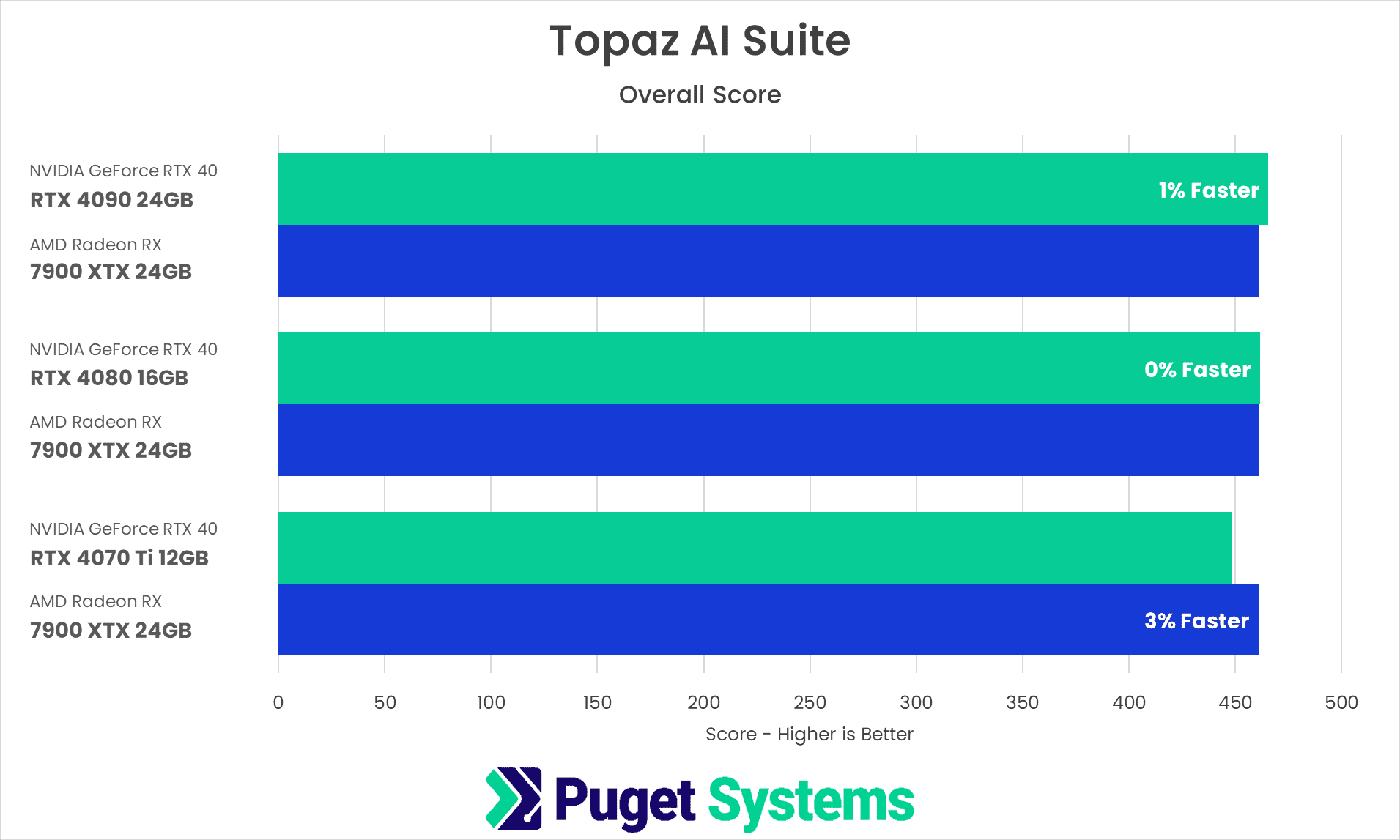

In the charts above, we are starting with a single “Overall Score” which is the geometric mean of the scores for each application. We usually like to use a single number like this to give a very broad overview of how different hardware performs, but for the Topaz Suite, performance can change dramatically based on the individual application, so we highly recommend looking at the individual application results if you use any of these applications.
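For readers unfamiliar with the geometric mean, here is a minimal sketch of how a combined score of this kind can be computed. The per-application scores below are hypothetical placeholders, not our measured results, and the exact normalization behind our Overall Score is not shown here.

```python
import math


def geometric_mean(scores):
    """Geometric mean computed via logarithms; every score must be positive."""
    scores = list(scores)
    if not scores or any(s <= 0 for s in scores):
        raise ValueError("scores must be a non-empty collection of positive numbers")
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


# Hypothetical per-application scores for one GPU (illustrative only).
app_scores = {
    "Video AI": 100.0,
    "Gigapixel AI": 80.0,
    "DeNoise AI": 125.0,
    "Sharpen AI": 100.0,
}

overall = geometric_mean(app_scores.values())
print(f"Overall Score: {overall:.1f}")  # Overall Score: 100.0
```

In this example, a 25% gain in one application and a 20% loss in another cancel out exactly. That is the property that makes a geometric mean a reasonable way to combine relative scores, and also the reason a single overall number can hide large per-application swings.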

A lot is going on in the charts above, so we are going to break down the results based on the two main questions the majority of readers are likely to be interested in: How does the RTX 40 Series compare to the previous generation RTX 30 Series, and how does it compare to its primary competition from AMD?

NVIDIA GeForce RTX 40 Series vs RTX 30 Series

We usually like to make comparisons based on the pricing of each GPU, but it is worth noting that GPU pricing is still extremely volatile. We will use the base MSRP as a baseline in this post, but be aware that exact apples-to-apples comparisons may change according to current market pricing. But, in terms of MSRP, the closest comparisons between the new RTX 40 Series cards and the previous generation are:

- RTX 4090 24GB vs RTX 3090 24GB ($1,599 vs $1,499)

- RTX 4080 16GB vs RTX 3080 Ti 12GB ($1,199 vs $1,199)

- RTX 4070 Ti vs RTX 3080 10GB ($799 vs $699)

In addition, we also decided to throw in the RTX 4090 24GB versus the RTX 3090 Ti 24GB ($1,599 vs $1,999) as a “best of both generations” comparison.

Starting with the Overall Score, the new RTX 40 Series GPUs come in only about 5% faster than the previous generation. Although Topaz AI is often touted as a heavy GPU workload, we have found that this only applies to the actual AI processing step. Tangential tasks like opening the image/video, choosing the AI model, and exporting the final result are all primarily CPU-based. Depending on the relative speed of your CPU and GPU, that can mean the GPU is not doing much for a significant portion of the total process.
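One way to reason about this effect is Amdahl's law, which estimates the whole-task speedup when only part of the work gets faster. The sketch below is illustrative only: the 40% GPU-bound share and the 1.5x GPU speedup are hypothetical values, not measurements from our testing.

```python
def overall_speedup(gpu_fraction: float, gpu_speedup: float) -> float:
    """Amdahl's law: speedup of the whole task when only the GPU-bound
    fraction of the original run time is accelerated by gpu_speedup."""
    if not 0.0 <= gpu_fraction <= 1.0:
        raise ValueError("gpu_fraction must be between 0 and 1")
    if gpu_speedup <= 0:
        raise ValueError("gpu_speedup must be positive")
    return 1.0 / ((1.0 - gpu_fraction) + gpu_fraction / gpu_speedup)


# Hypothetical: 40% of the run time is GPU-bound, and the new GPU
# finishes that portion 1.5x faster than the old one.
print(f"{overall_speedup(0.4, 1.5):.2f}x")  # 1.15x
```

Under those assumptions, a GPU that is 50% faster at the AI processing itself shortens the full task by only about 15%, because the CPU-bound steps take just as long as before.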

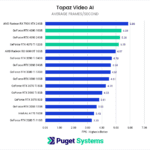

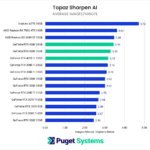

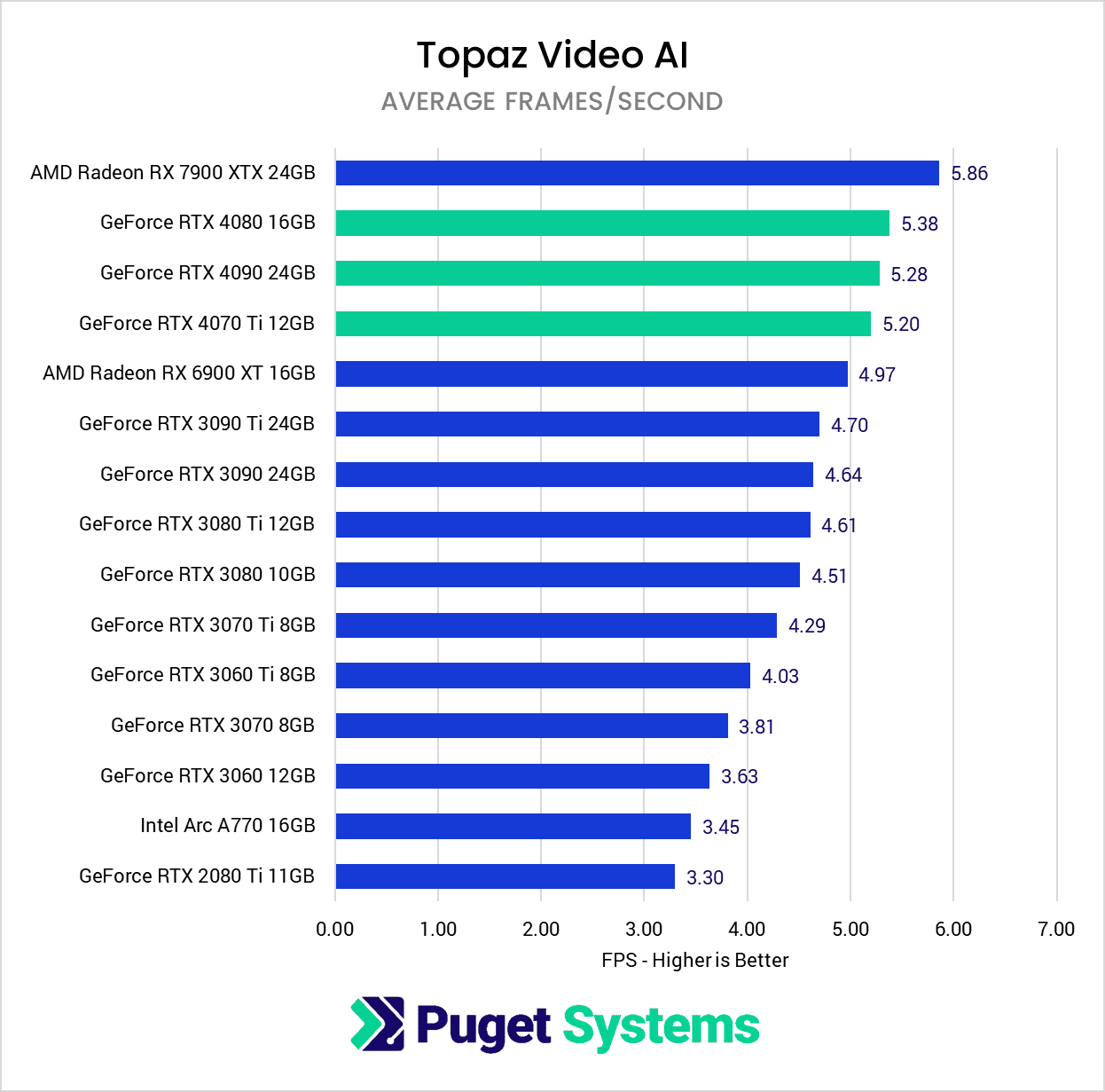

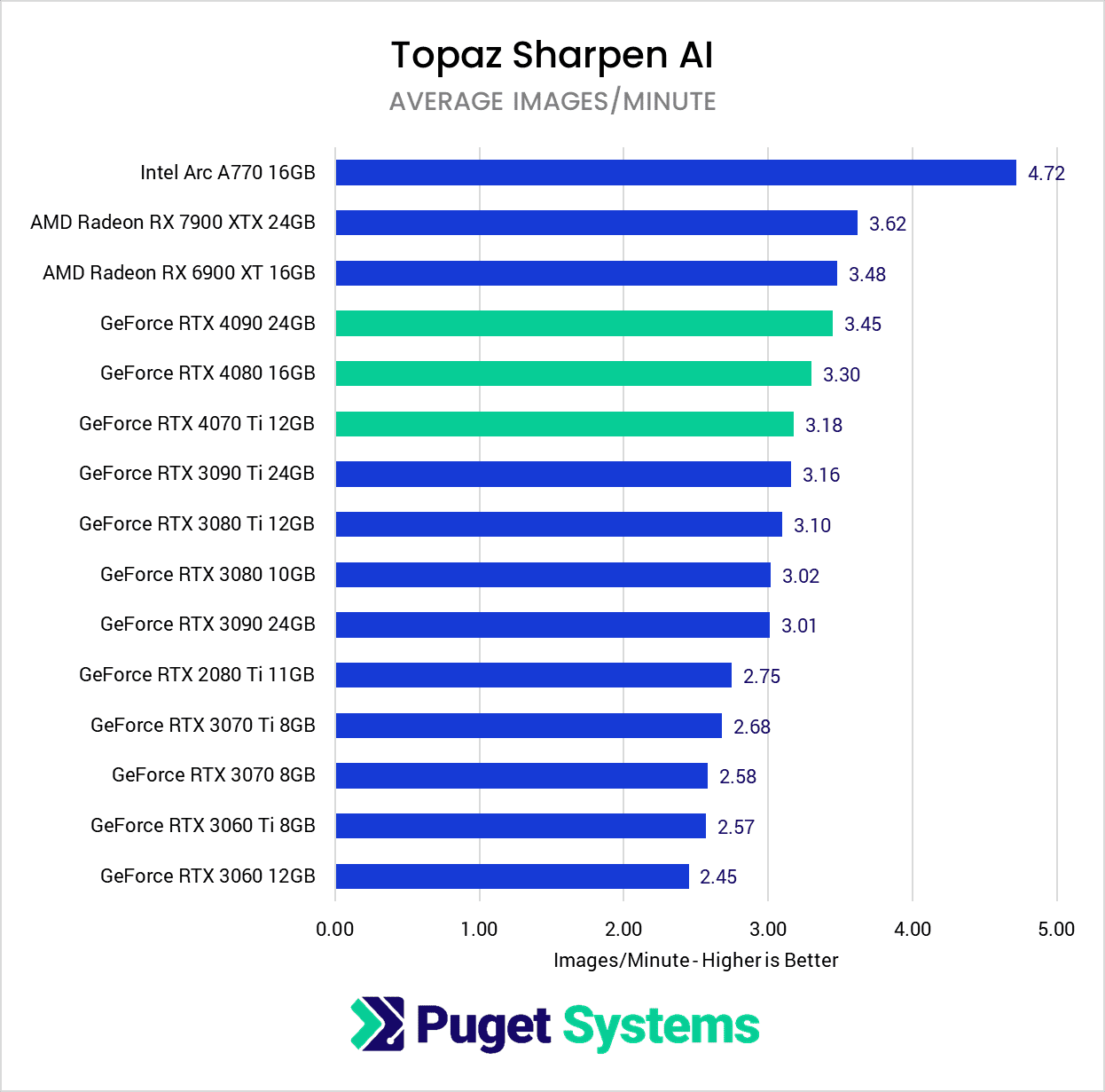

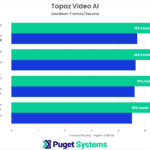

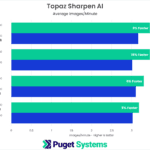

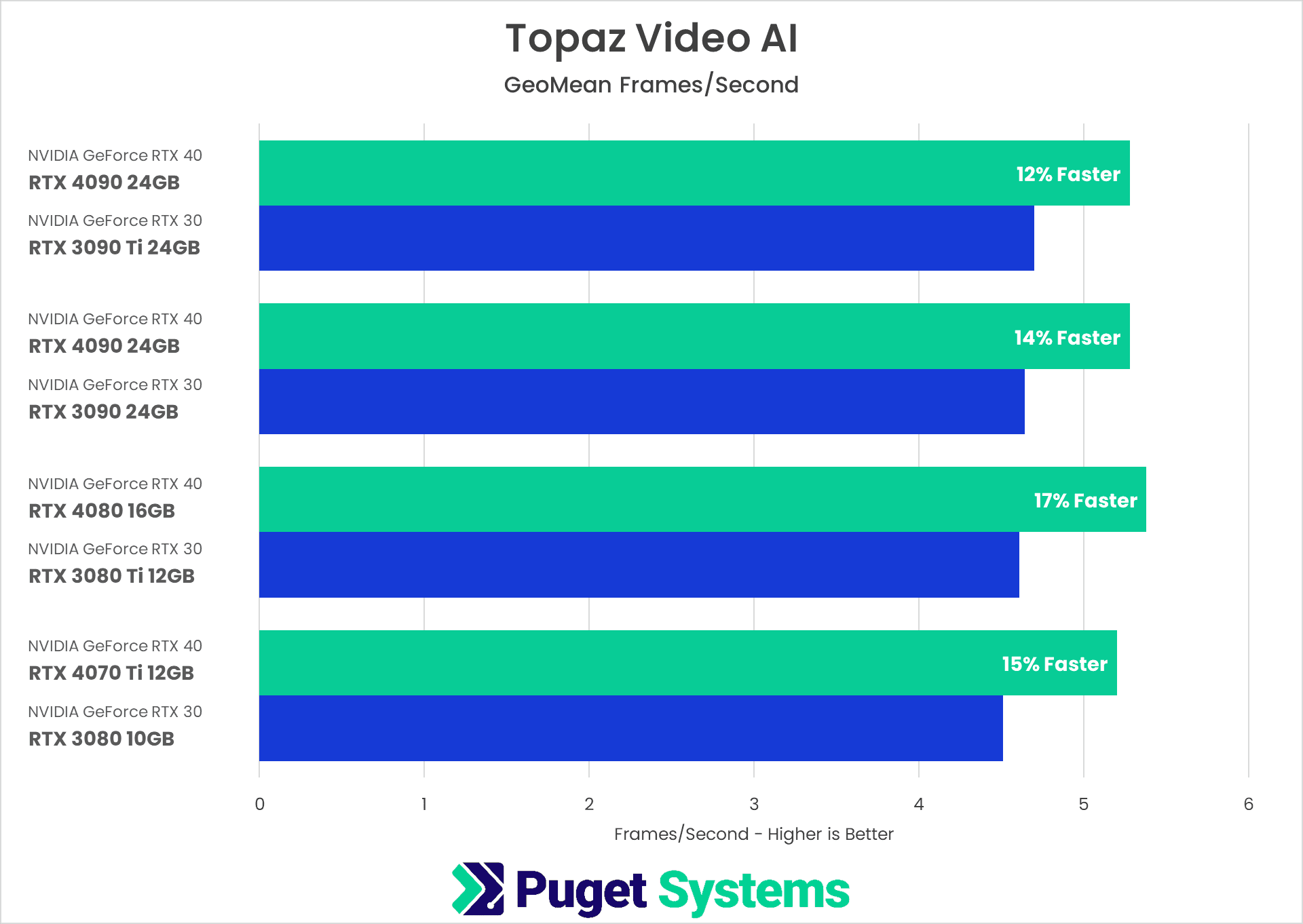

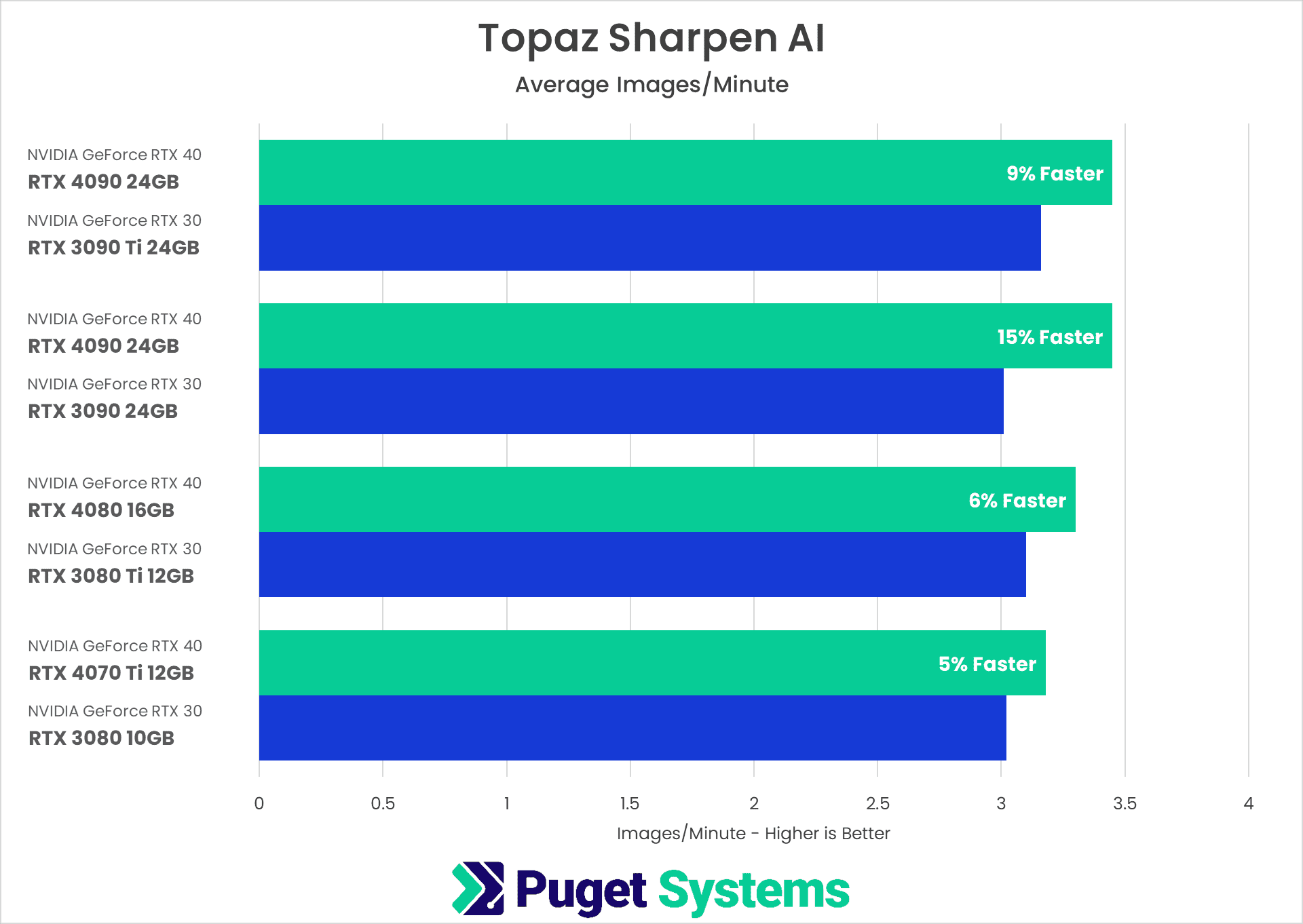

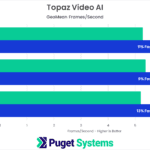

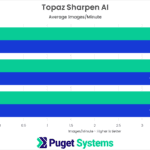

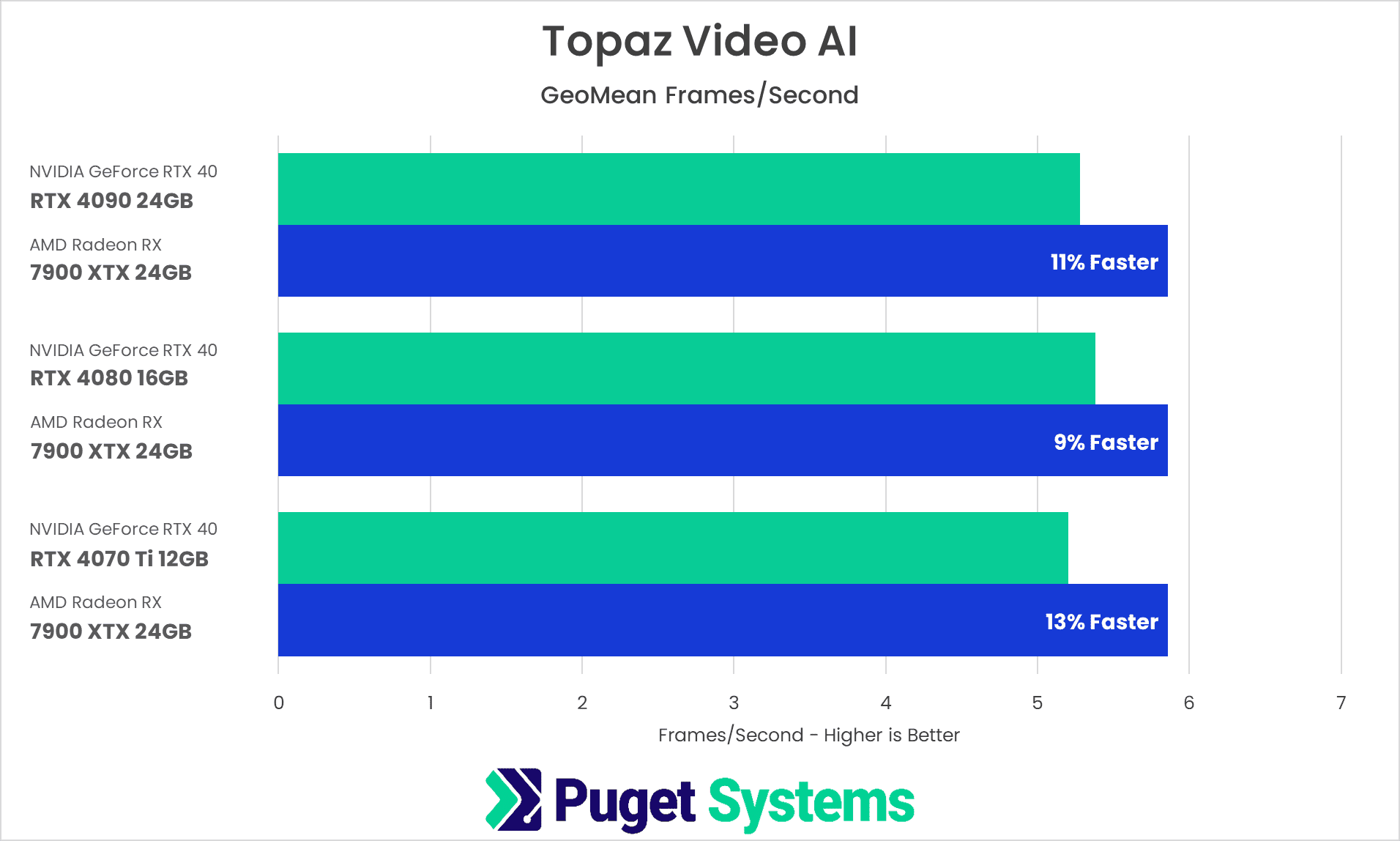

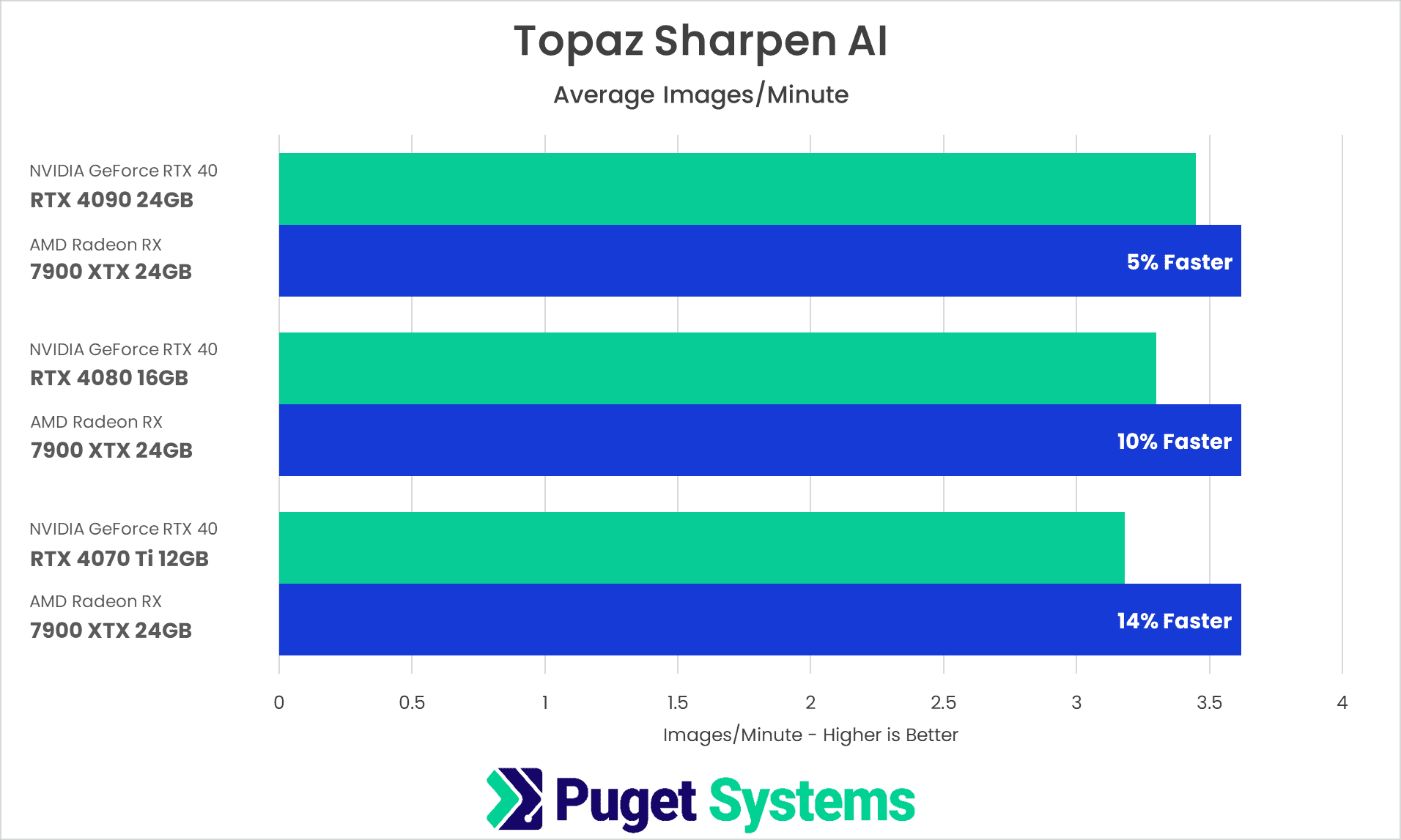

From an individual application perspective, we want to point out that Topaz Video AI (chart #2) makes much better use of more powerful GPUs than the other applications, with about a 15% gen-over-gen performance gain. Sharpen AI (chart #3) comes in second place, with the RTX 4090 beating the RTX 3090 by a similarly solid 15%, but the RTX 4080 and 4070 Ti are only about 5% faster than the RTX 3080 Ti and RTX 3080, respectively.

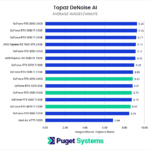

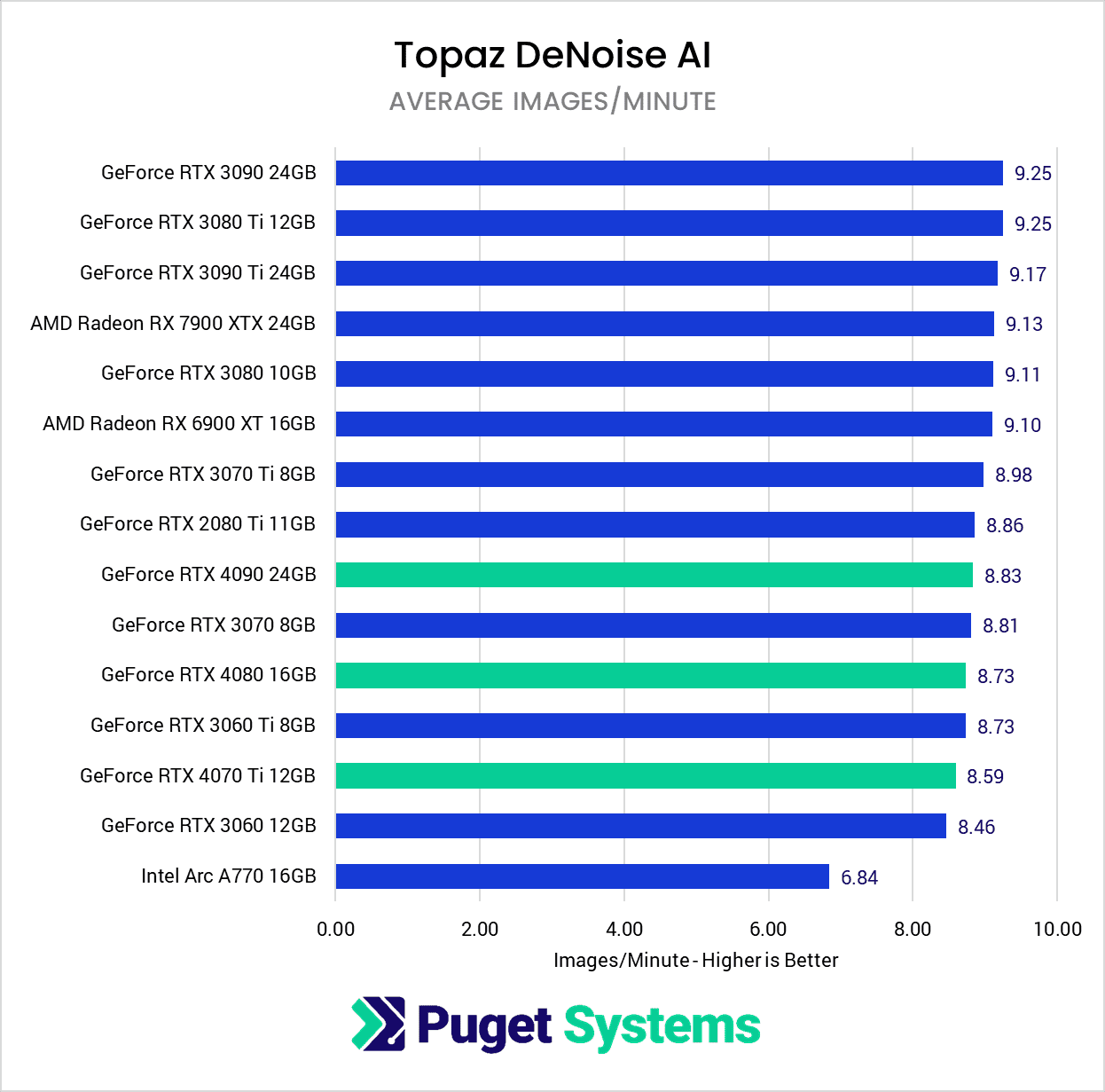

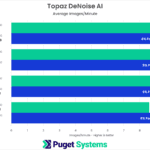

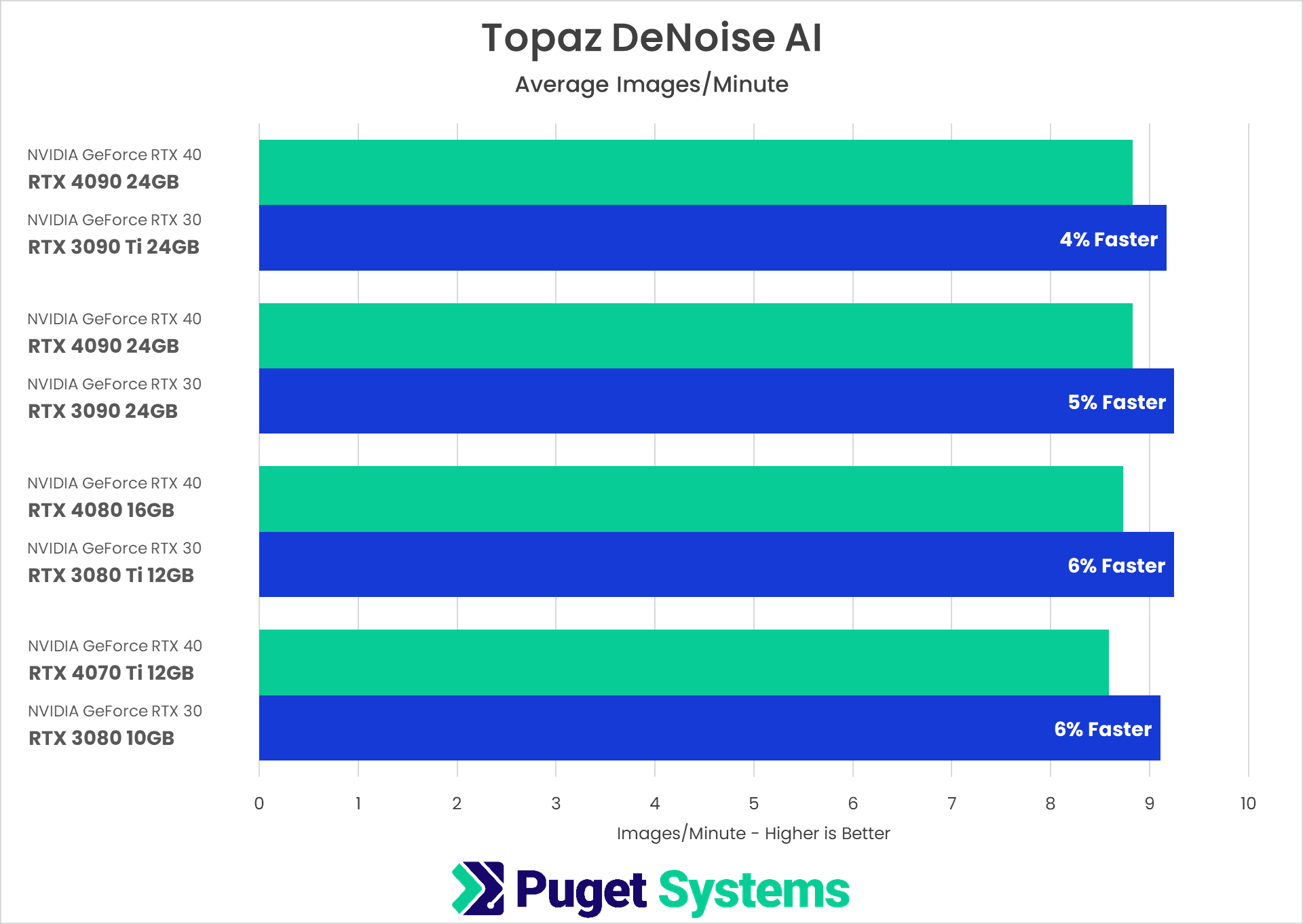

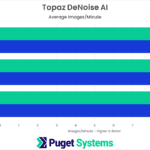

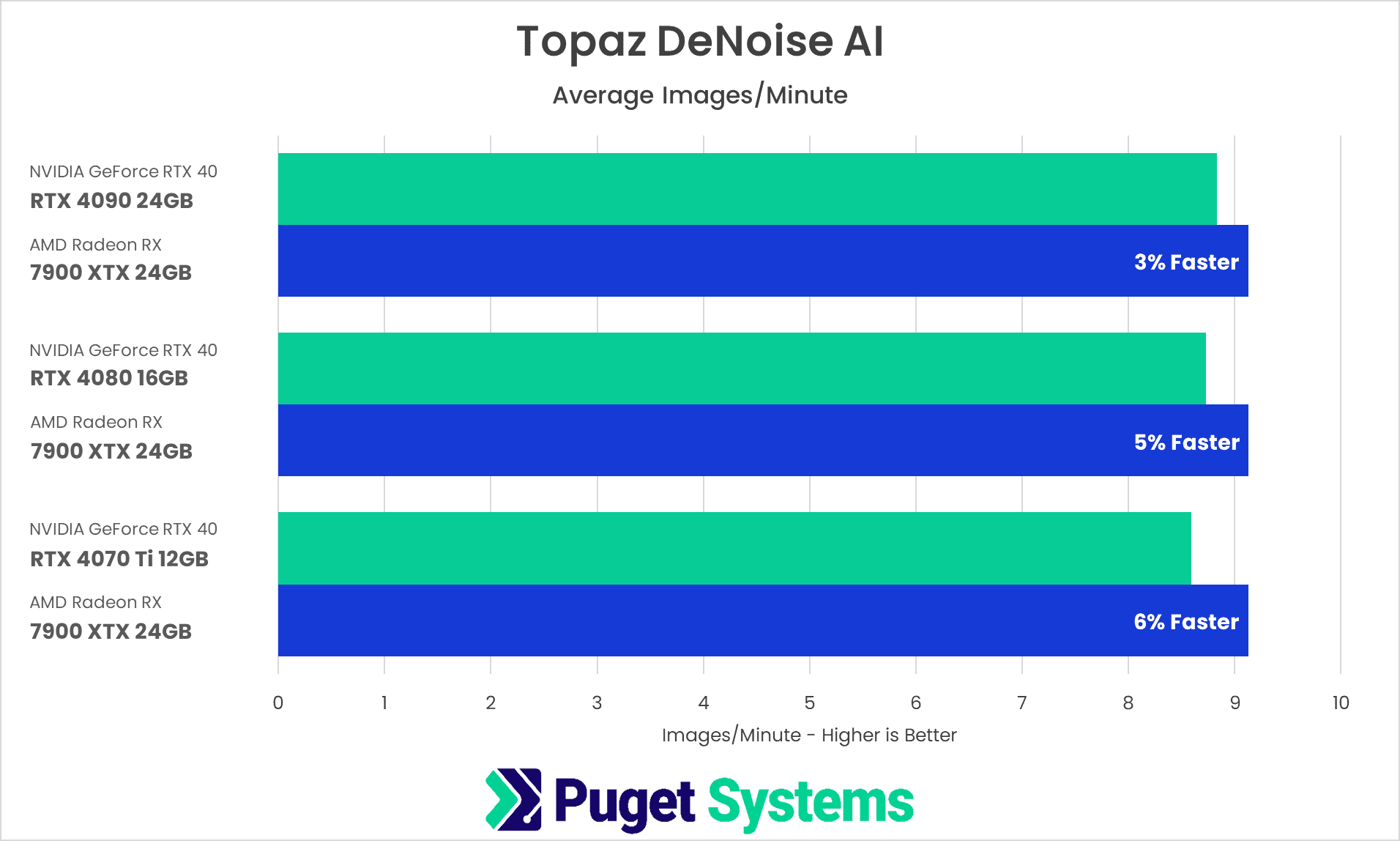

Topaz Gigapixel AI (chart #3) showed minimal gains with the RTX 40 Series, and DeNoise AI (chart #4) was actually slower with the new GPUs. We are not sure why this would be the case, although we will again note that Topaz Labs is constantly improving their applications, and there may be specific RTX 40 Series optimizations that have not yet been implemented.

We do want to point out that this is only looking at a single-generation difference, which often isn’t going to be huge for these kinds of workloads. While we didn’t include it in this section’s charts, if we were to compare these GPUs to the RTX 2080 Ti (two generations back), the RTX 4080 is about 60% faster in applications like Topaz Video AI. In other words, while upgrading from an RTX 30 Series GPU to an RTX 40 Series probably isn’t worth it, if you have an older GPU, you could see a much more significant performance gain in some of these applications.

NVIDIA GeForce RTX 40 Series vs AMD Radeon RX

Unlike NVIDIA, AMD has a fairly slim GPU product line. They don’t have anything approaching the MSRP of the GeForce RTX 4090 and only have two models currently available for their Radeon 7000 Series: the Radeon RX 7900 XT and the Radeon RX 7900 XTX. For the purposes of this article, we are only going to include the Radeon RX 7900 XTX in this section. Just be aware that the 7900 XTX is $200 more expensive than the RTX 4070 Ti, $200 less expensive than the RTX 4080, and far less expensive than the RTX 4090. Because of this, we will not be looking for an exact price/performance match, but rather at how NVIDIA and AMD compare on a relative level.

Starting with the Overall Score, the performance difference between AMD and NVIDIA is pretty much… nothing. The 7900 XTX is slightly faster than the RTX 4070 Ti but is also more expensive. However, we will again note that the Overall Score is not as useful here as it is for our other benchmarks, as the relative performance can swing wildly based on the individual application.

Drilling down into each application, Topaz Video AI (chart #2) and Sharpen AI (chart #4) are both big wins for AMD, with the Radeon 7900 XTX beating the NVIDIA GeForce RTX 40 Series GPUs by 9-13%. DeNoise AI is another win for AMD, but by a smaller ~5%. On the other hand, Gigapixel AI (chart #3) is an even bigger win for NVIDIA, with the RTX 40 Series beating the Radeon 7900 XTX by anywhere from 22 to 26%.

AMD has a decent lead for most of these applications, with the exception of Gigapixel AI, where NVIDIA has a much larger lead. These all balance out almost perfectly in the end, which is why the “Overall Score” we calculated based on performance across all Topaz AI applications was almost identical for AMD and NVIDIA.
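To see how those leads can cancel out, the sketch below takes rough midpoints of the percentages quoted above and combines them with a geometric mean. It assumes each application contributes a single ratio to the overall figure, which is a simplification, and the ratios are approximations rather than the measured chart values.

```python
from statistics import geometric_mean

# AMD-to-NVIDIA performance ratios, using rough midpoints of the leads
# quoted in the text. Illustrative approximations, not measured values.
amd_vs_nvidia = {
    "Video AI": 1.11,          # AMD ahead by roughly 9-13%
    "Sharpen AI": 1.11,        # AMD ahead by roughly 9-13%
    "DeNoise AI": 1.05,        # AMD ahead by about 5%
    "Gigapixel AI": 1 / 1.24,  # NVIDIA ahead by roughly 22-26%
}

overall_ratio = geometric_mean(list(amd_vs_nvidia.values()))
print(f"AMD vs NVIDIA overall: {(overall_ratio - 1) * 100:+.1f}%")
```

With these approximate inputs, the combined difference works out to roughly one percent, which is in line with the near-identical Overall Scores: three modest leads for AMD are offset by one large lead for NVIDIA.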

How Well Do the NVIDIA GeForce RTX 40 Series Perform in Topaz AI?

Overall, the NVIDIA GeForce RTX 40 Series GPUs do well in the Topaz AI suite, but their benefit highly depends on the specific application you are using. Topaz Video AI and Sharpen AI tend to give you the biggest performance gains when using a more powerful GPU, while Gigapixel and DeNoise AI appear to be more CPU limited.

If you already have an RTX 3080 or above, the new RTX 40 Series GPUs are likely not worth an upgrade for any of these applications. However, if your current GPU is an RTX 3070 or older model, you could see a decent performance increase – especially in Topaz Video and Sharpen AI.

The question of AMD vs NVIDIA is even more complicated. The RTX 40 Series GPUs have a terrific ~25% performance lead over the Radeon 7000 Series in Gigapixel AI, but AMD holds a smaller 5-14% lead in the other applications. On the whole, this washes out to almost identical performance between NVIDIA and AMD overall, but what it really means is that the faster GPU brand is going to highly depend on the specific Topaz AI applications you are using.

If you are looking for a workstation for Topaz AI with an NVIDIA GeForce RTX 40 Series GPU, we recommend checking out our Photoshop or Premiere Pro solutions page or contacting one of our technology consultants for help configuring a workstation that meets the specific needs of your unique workflow.